Voice Assistants and Privacy Issues

In 2018, PwC surveyed a sample of 1,000 Americans aged 18 to 64 to learn more about awareness of voice technology. The results were striking: 90% of respondents said they were already familiar with voice assistant technology, 57% were already using a voice assistant on their smartphone, and 20-30% were using the technology on other devices like tablets, laptops, speakers, and TV remotes.

But people were not always so receptive to this technology. Back in 2013, Microsoft tried to pioneer voice assistants by launching the Kinect as a mandatory part of its new Xbox One. The launch should have gone off without a hitch. Its competing gaming console was far more expensive without being significantly more valuable.

But Microsoft's sure win was not to be. The company bungled the Xbox One launch because it revealed that the Kinect (a mandatory accessory) was always listening and watching in the background.

There are arguments to be made about a failure to play to Xbox's core demographic, but one thing is increasingly clear: The market in general was not ready for a voice assistant. In 2017, a year before the PwC survey, the Kinect was declared officially dead.

How times have changed. The number of voice assistants in customers' homes is projected to grow to 8 billion by 2023. At the same time, the concern about privacy hasn't dissipated entirely. Research suggests that 40% of those who use voice assistant devices have concerns about what's happening to their voice data. Those concerns will grow as the number of devices grows. Voice-assistant televisions alone are projected to grow by 143% by 2023.

Businesses that develop voice assistants or applications for them must take privacy seriously, both to retain customers and to protect themselves in the face of increasingly strict privacy legislation.

So, what privacy issues do voice assistants create, and how can you manage and mitigate them? Let's find out.

- 1. Why Voice Assistants Create Privacy Issues

- 2. What Regulatory Requirements Cover Voice Assistants?

- 2.1. The Implications of the GDPR for Voice Assistants

- 2.2. What the CCPA Means for Voice Assistants

- 2.3. The Issue with Children's Data

- 3. How to Protect Customer Data and Comply with the Law

- 3.1. Follow Consent Rules to the Letter

- 3.2. Create and Update a Privacy Policy

- 4. How to Create a Privacy Policy for Your Website

Why Voice Assistants Create Privacy Issues

Every voice assistant has inherent privacy issues. That is the nature of collecting data, particularly biometric data like voice data. The end user may not realize it, but a voice assistant is only useful to the developer if the voice data it collects can be reviewed, whether through software, human review, or a combination of the two.

From the consumer perspective, the issues associated with voice assistants are twofold. First, the consumer is worried about "who is listening." To address that worry, businesses that offer voice assistants must be transparent about how the data gets used and who gets to hear it.

The second issue is one of security. Voice assistants only "listen" in the background until called upon, but they still collect huge amounts of data, and that volume will only be compounded by the growing number of applications available for voice assistants and the growth in users.

Hundreds of millions of people already use voice assistants, which means the amount of data they generate is already staggering. What's more, the keyword trigger designed to "activate" the voice assistant can be sensitive, which means people can accidentally engage the assistant and inadvertently record huge amounts of sensitive data.

Transparency and privacy come together again because the data created by voice assistants is increasingly personal. Smart assistants don't only collect data created through voice interactions, which can be virtually limitless. They also integrate with apps and other devices.

For example, most robot vacuums now pair with voice assistants, which grants the voice assistant access to things like the map of your home that the robot creates to navigate its surroundings. When that map enters the voice assistant's repository and is combined with data like how often you forget to set your security alarm (which generates automatic reminders), the result is a very powerful data set that is not only hyper-personal but also very attractive to hackers.

Because voice assistants need this data to function and build more useful features, limiting data is not an option. As a result, protecting it and being open about what data you have is incredibly important.

What Regulatory Requirements Cover Voice Assistants?

If you are developing a voice assistant or an adjacent product, then you have increasing legal obligations with regard to data protection and privacy. For most businesses, these rules fall under three laws: the General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the Children's Online Privacy Protection Act (COPPA).

Generally, the two that make the most difference to voice assistant uses are the GDPR and CCPA. COPPA is important, but it poses radically different questions, as described below.

The Implications of the GDPR for Voice Assistants

The GDPR became active law on May 25, 2018. Its aim is to protect European residents from the overreach or misuse of their data for commercial purposes. The core goal is to make sure that individuals retain ownership and control over their data, and to make data subjects aware of who has their data and why they're using it.

First, all voice assistants must navigate the issue of consent. The GDPR requires all data controllers and processors to get consent.

You already know that your website and app need to be GDPR-compliant, but your voice assistant must comply, too. Meeting GDPR obligations can be more complicated when dealing with voice data. While not all voice data falls in the realm of personal data, it can be very difficult to sort through huge amounts of voice data.

Why would you need to sort through voice data? European residents have rights granted to them under the GDPR.

These rights include the right to know what data you have, the right to rectification, and the right to erasure. You need to be able to search through the data you collect and store and delete the relevant data as requested.

Meeting these obligations is more technologically complicated and labor-intensive for voice data than it is for text databases, and it's worth taking into consideration when you build the voice assistant.
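One way to picture what "building it in" might look like: if every stored recording is indexed by the user it belongs to, access and erasure requests become simple lookups instead of a search through raw audio. The Python sketch below is a minimal in-memory illustration only. The names `VoiceRecording`, `VoiceDataStore`, `access_request`, and `erasure_request` are hypothetical and do not come from any real product or library, and a production system would also need to cover audio files, backups, and copies held by third parties.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class VoiceRecording:
    """One stored utterance (hypothetical record shape)."""
    recording_id: str
    user_id: str
    transcript: str
    captured_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class VoiceDataStore:
    """In-memory store that keeps recordings grouped by user ID."""

    def __init__(self) -> None:
        self._by_user: Dict[str, List[VoiceRecording]] = {}

    def add(self, recording: VoiceRecording) -> None:
        # Index at write time so later requests do not require a full scan.
        self._by_user.setdefault(recording.user_id, []).append(recording)

    def access_request(self, user_id: str) -> List[VoiceRecording]:
        # Right to know: return a copy of everything held for this user.
        return list(self._by_user.get(user_id, []))

    def erasure_request(self, user_id: str) -> int:
        # Right to erasure: drop the user's recordings, report how many.
        return len(self._by_user.pop(user_id, []))


store = VoiceDataStore()
store.add(VoiceRecording("r1", "user-1", "turn on the lights"))
store.add(VoiceRecording("r2", "user-1", "what is the weather"))
store.add(VoiceRecording("r3", "user-2", "play some music"))

deleted = store.erasure_request("user-1")  # removes r1 and r2 only
```

The design choice here is simply to attach a user identifier when the data is captured. Retrofitting that link onto an existing archive of unlabeled audio is where the labor-intensive part tends to come from.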

It's also important to note that the GDPR has already had real implications for voice assistants. In 2019, a privacy watchdog in Germany forced Google to stop the human review of audio snippets after a leak of those snippets from the Google Assistant. When a contractor handed over recordings to a Belgian media outlet, the outlet could identify individuals in the clips and learned very personal information, like medical conditions and addresses.

The Irish data protection body also reported a breach notification in July 2019, saying Google suspended processing while it examined more Google Assistant data breaches.

In other words, if your voice assistant will be available to European residents, then you must ensure you're meeting GDPR requirements.

What the CCPA Means for Voice Assistants

The CCPA doesn't yet protect consumer data with the same vigor as the GDPR, but it's still a law worth considering when you consider the privacy challenges associated with voice assistants.

Unlike the GDPR, the CCPA gives consumers the right to opt out of commercial data collection. However, the right to opt out means the consumer must proactively seek out the option. It removes the issue of consent that's such an important part of the GDPR: you can start collecting and processing data from consumers even if you don't have their permission.

Additionally, data collected for research and development purposes is still allowed, which means that the issues noted in Europe will likely remain lawful in the U.S.

The Issue with Children's Data

The issue in compliance is that voice assistant technology almost inherently violates some sections of the law. This is particularly true with COPPA, which is the U.S.'s premier privacy law protecting children online.

Why? Because COPPA provides strict consent requirements for collecting and storing the data of children under 13 years old. When devices record the voices of children under 13 and they don't have explicit, verifiable consent from the children's parents, then the owner of the device or product runs afoul of COPPA.

Plus, there's no way to prevent children under 13 from using the device without getting in the way of the total functionality of the voice assistant.

However, the Federal Trade Commission (FTC) already acknowledged this in 2017. It relaxed the rule that would have prevented kids from using voice assistants. Its guidance says:

"The Commission recognizes the value of using voice as a replacement for written words in performing search and other functions on internet-connected devices. Verbal commands may be a necessity for certain consumers, including children who have not yet learned to write or the disabled."

What does this mean for you? So far, the government has yet to fine any of the voice assistant developers for COPPA violations. However, it is something to be mindful of.

Microsoft, in particular, has found ways to ensure it gathers consent for at least some of its voice assistant products, so compliance is possible.

How to Protect Customer Data and Comply with the Law

If you use or integrate a voice assistant, then you have legal obligations. In addition to your general privacy requirements, you also need to put a renewed emphasis on obtaining consent and including any voice assistant use in your Privacy Policy.

Follow Consent Rules to the Letter

The GDPR has the strictest rules for obtaining and maintaining user consent, so if the European privacy legislation applies to you, then it's smart to use the GDPR guides as your baseline.

If you start using a voice assistant, you must ask for users' consent before collecting any data. This remains true even if you already have your customers' consent for other types of data collection, such as email marketing.
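The point that consent for one purpose does not carry over to another can be sketched in code. The Python example below is a minimal illustration under assumed names: `Purpose`, `ConsentLedger`, and `store_voice_clip` are hypothetical and not part of any platform's SDK. It tracks consent per user and per purpose, and refuses to store a voice clip unless voice-data consent specifically has been granted.

```python
from enum import Enum
from typing import List, Set, Tuple


class Purpose(Enum):
    """Purposes a user can consent to separately (illustrative list)."""
    EMAIL_MARKETING = "email_marketing"
    VOICE_DATA = "voice_data"


class ConsentLedger:
    """Tracks which (user, purpose) pairs currently have consent."""

    def __init__(self) -> None:
        self._granted: Set[Tuple[str, Purpose]] = set()

    def grant(self, user_id: str, purpose: Purpose) -> None:
        self._granted.add((user_id, purpose))

    def withdraw(self, user_id: str, purpose: Purpose) -> None:
        # discard() is a no-op if consent was never granted.
        self._granted.discard((user_id, purpose))

    def has_consent(self, user_id: str, purpose: Purpose) -> bool:
        return (user_id, purpose) in self._granted


def store_voice_clip(
    ledger: ConsentLedger,
    user_id: str,
    clip: bytes,
    storage: List[Tuple[str, bytes]],
) -> bool:
    """Store the clip only if the user consented to voice data collection."""
    if not ledger.has_consent(user_id, Purpose.VOICE_DATA):
        return False  # nothing is kept without voice-specific consent
    storage.append((user_id, clip))
    return True


ledger = ConsentLedger()
storage: List[Tuple[str, bytes]] = []

# Existing email-marketing consent does not unlock voice collection.
ledger.grant("user-1", Purpose.EMAIL_MARKETING)
blocked = store_voice_clip(ledger, "user-1", b"audio", storage)

# Once voice consent is granted, the same call succeeds.
ledger.grant("user-1", Purpose.VOICE_DATA)
allowed = store_voice_clip(ledger, "user-1", b"audio", storage)
```

Checking consent at the point of storage, and keying it by purpose, also makes withdrawal straightforward: once `withdraw` is called, later clips are rejected by the same check.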

The good news is that if you want to integrate your app or product with Siri, Google Assistant, or Alexa, then Apple, Google, and Amazon provide instructions for you.

Google Assistant takes care of obtaining consent through its own app, which means you'll collect consent every time automatically. Google also takes care of the options for modifying or withdrawing consent.

If you're developing an app or product for Apple's Siri, then your app or extension must have Siri capability enabled. Then, Apple will request user permission from your customers automatically.

Create and Update a Privacy Policy

If you want to integrate your app or product with Apple, Google, Amazon, or any other voice assistant provider, you will need a Privacy Policy and you need to identify your use of voice data within it.

Hulu integrates with voice assistants and uses data from them to learn more about you. So, the company includes voice assistant data under its "Information We Collect" heading, which keeps it compliant with the GDPR:

Netflix has a similar clause that explicitly addresses voice assistant platforms:

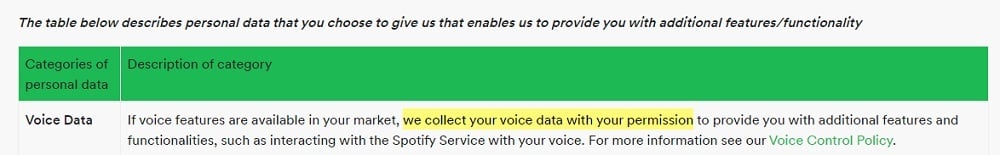

Spotify includes a table in its Privacy Policy that breaks down each of the categories of personal information it collects, along with a description of the category. Voice Data is included in the table with information that Spotify will collect voice data with permission:

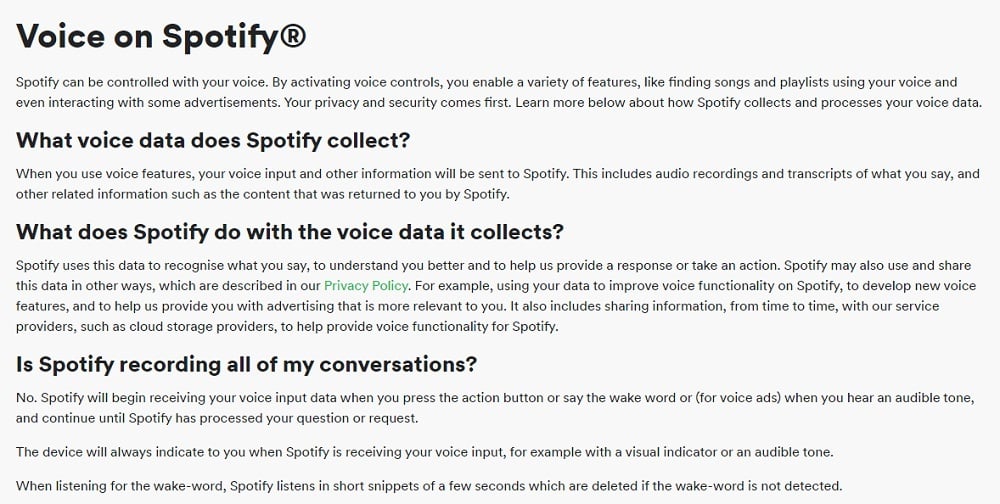

Spotify actually goes as far as having its own Voice Control Policy where it outlines what voice data is collected, when it is collected, what is done with the voice data and how users can turn off voice controls and voice ads:

While it isn't necessary to have this as a separate policy, this is a really nice touch that will help Spotify users feel more secure and in control, thus gaining trust for the brand.

If you're using voice assistants, you need to be aware of how this can create privacy issues. To remedy this, make sure you get appropriate consent for collecting voice data.

While many integration platforms will handle this for you, you need to do your part by creating a Privacy Policy that clearly notes that you collect and use voice data. Let users know what you use this data for, and how they can opt out of having this done, if possible.

This will help you collect voice data in compliance with the law while maintaining user trust.

How to Create a Privacy Policy for Your Website

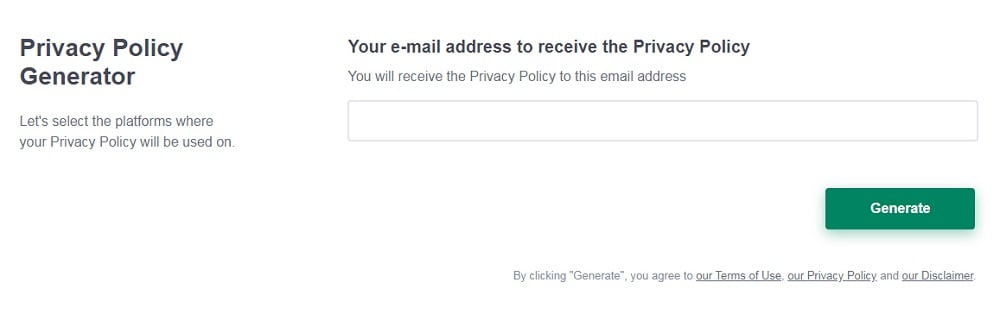

Need a Privacy Policy? Our Privacy Policy Generator will help you create a custom policy that you can use on your website and mobile app. Just follow these few easy steps:

- Click on "Start creating your Privacy Policy" on our website.

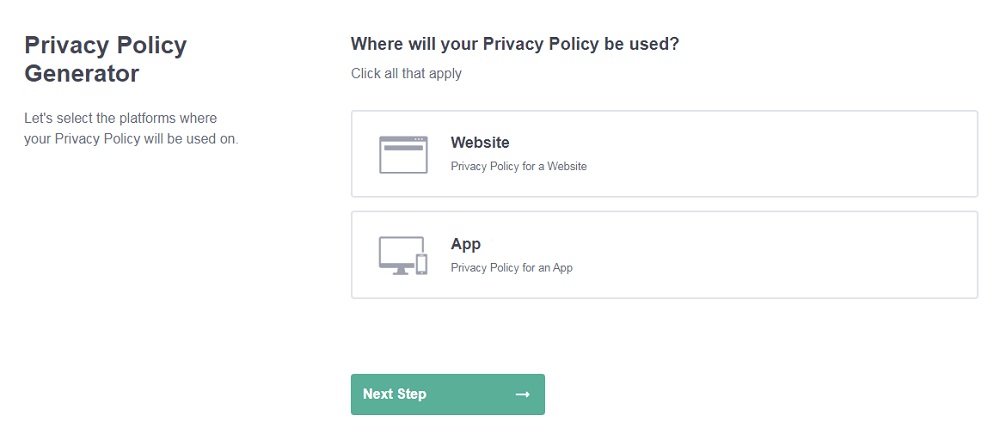

- Select the platforms where your Privacy Policy will be used and go to the next step.

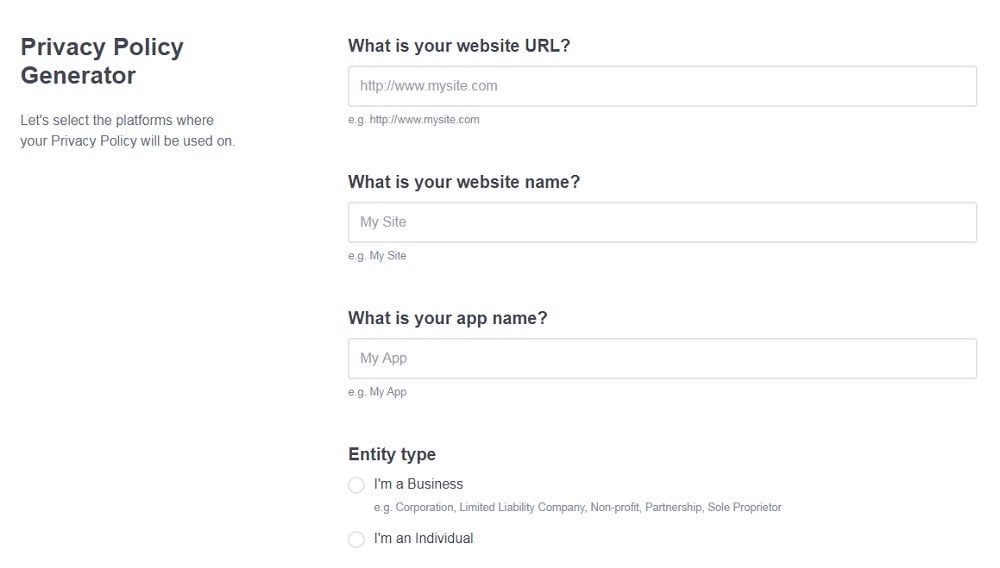

- Add information about your business: your website and/or app.

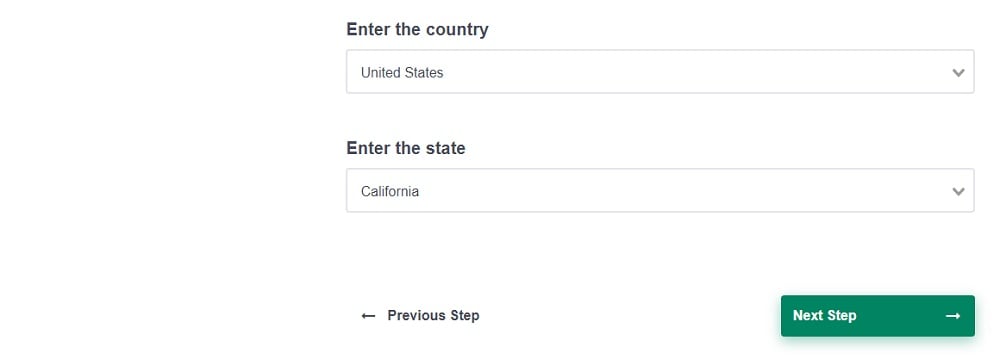

- Select the country:

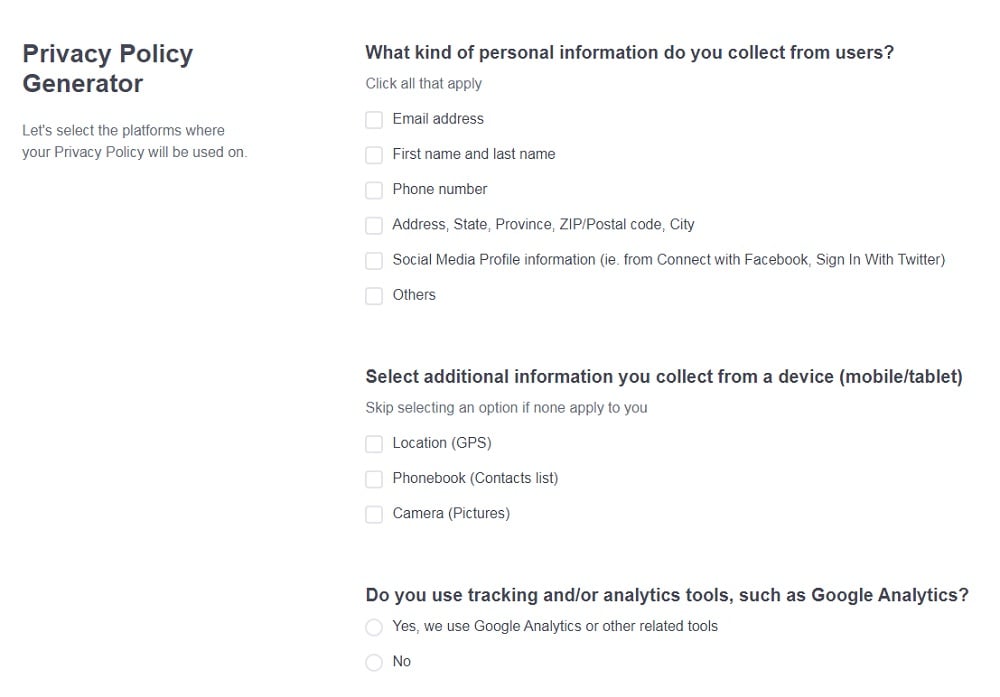

- Answer the questions from our wizard relating to what type of information you collect from your users.

- Enter your email address where you'd like your Privacy Policy sent and click "Generate".

And you're done! Now you can copy or link to your hosted Privacy Policy.